
From Fig. 15 it is seen that level 2 processor dead-time is a leading source of loss in our system. Several people have suggested that this loss could be reduced by a pre-level-2 decision based simply on a count of lead glass blocks above some threshold. This idea was tested, first by Phil Rubin and then by Elton Smith and Kyle Burchesky [1], using data collected during the June period. They conclude that this simple criterion rejects 95% or more of the level 0 triggers while preserving almost all of the events that the level 2 processor would keep. In Table 1 of their report, Elton and Kyle show that for a particular choice of threshold, more than 97% of the level 0 triggers are rejected while 98% of the events with MAM values of 64 or higher (the level 2 threshold used during the June period) are preserved.
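The criterion amounts to a simple count, and the two figures of merit quoted above can be computed offline from it. The sketch below is hypothetical. It assumes each event is available as a list of integrated block charges plus its MAM value, and that the cut requires at least `min_blocks` blocks above `threshold`. The function names and the event format are illustrative only and are not taken from the report [1].

```python
from typing import List, Sequence, Tuple

# HYPOTHETICAL offline event format: (integrated charge per lead glass block, MAM value)
Event = Tuple[Sequence[float], int]


def level1_accept(block_charges: Sequence[float], threshold: float, min_blocks: int = 1) -> bool:
    """Accept the event if at least `min_blocks` blocks have charge above `threshold`."""
    n_over = sum(1 for q in block_charges if q > threshold)
    return n_over >= min_blocks


def evaluate_cut(events: List[Event], threshold: float, min_blocks: int = 1,
                 mam_cut: int = 64) -> Tuple[float, float]:
    """Return (fraction of triggers rejected, fraction of MAM >= mam_cut events kept)."""
    n_rejected = 0
    n_signal = 0
    n_signal_kept = 0
    for charges, mam in events:
        accepted = level1_accept(charges, threshold, min_blocks)
        if not accepted:
            n_rejected += 1
        if mam >= mam_cut:  # events the level 2 threshold would keep
            n_signal += 1
            if accepted:
                n_signal_kept += 1
    rejection = n_rejected / len(events) if events else 0.0
    efficiency = n_signal_kept / n_signal if n_signal else 0.0
    return rejection, efficiency


# Toy events, invented purely to exercise the code
toy = [([0.0, 1.0], 10), ([0.0, 50.0], 80), ([2.0, 3.0], 70), ([0.5], 5)]
print(evaluate_cut(toy, threshold=20.0))  # (0.75, 0.5)
```

With `min_blocks=1`, the count reduces to a fast OR of per-block comparator outputs, which is the form of the hardware implementation discussed below.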

The scheme would work as follows. The adc modules connected to the lead glass detector produce a logic signal on the Fastbus auxiliary backplane a short time after the integration gate has closed. This signal is the output of a comparator between the charge on the integrator for that channel and a programmable level. A fast OR of the outputs from all instrumented blocks in the wall could be formed, and a fast-clear generated if the OR signal is absent. Those familiar with the adc say that it could be ready for the next event as soon as 250ns after the fast-clear is received. The LeCroy 1877 multihit tdc specification gives 290ns as the required fast-clear settling time, but the 1875A high-resolution tdc that we use to digitize the tagger and RPD signals requires 950ns between the fast-clear and the earliest subsequent gate.

Therefore the earliest that the acquisition could be re-enabled following a fast-clear from a level 1 decision would be 1.2μs after the receipt of the level 0 trigger. This is equivalent to a 10-fold reduction in the level 2 dead-time.
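The 1.2μs figure is set by the slowest module to recover from a fast-clear. A minimal sketch of that bookkeeping follows. The settling times are the ones quoted above. The level 1 decision latency, meaning the time from the level 0 trigger until the fast-clear arrives, is not given in the text and is treated as an assumed input. The value of 250ns used here is simply what, added to the 950ns of the 1875A, reproduces 1.2μs.

```python
# Fast-clear recovery times quoted in the text, in ns
FAST_CLEAR_SETTLE_NS = {
    "lead glass adc": 250,
    "LeCroy 1877 tdc": 290,
    "LeCroy 1875A tdc": 950,
}


def earliest_reenable_ns(decision_latency_ns: float, settle_ns=FAST_CLEAR_SETTLE_NS) -> float:
    """Time after the level 0 trigger at which acquisition can be re-enabled.

    decision_latency_ns: ASSUMED time from the level 0 trigger until the
    fast-clear reaches the modules (not given in the text).
    """
    # Every module must have recovered, so the slowest one sets the limit
    return decision_latency_ns + max(settle_ns.values())


slowest = max(FAST_CLEAR_SETTLE_NS, key=FAST_CLEAR_SETTLE_NS.get)
print(slowest, earliest_reenable_ns(250))  # LeCroy 1875A tdc 1200
```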


In order to incorporate this improvement into the model, it is necessary to break up the level 1 decision, which rejects 97% of the level 0 triggers, into a passing fraction for signal and one for nonsignal, similar to how it was done in Eq. 14.
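The following is one generic way to carry out this split. It is shown only as an illustration and does not reproduce Eq. 14. Separate level 1 passing fractions for signal and nonsignal are weighted by the signal content of the level 0 triggers. The mean dead-time charged to each level 0 trigger is then the level 1 time plus the level 2 time for the fraction that passes. The signal fraction, the nonsignal passing fraction and the level 2 processing time are hypothetical inputs. Only the 98% signal efficiency and the 1.2μs level 1 time come from the text.

```python
def level1_pass_fraction(signal_fraction: float, eps_signal: float, eps_nonsignal: float) -> float:
    """Overall level 1 passing fraction from separate signal and nonsignal efficiencies."""
    return signal_fraction * eps_signal + (1.0 - signal_fraction) * eps_nonsignal


def mean_dead_time_per_l0(t_level1_us: float, pass_fraction: float, t_level2_us: float) -> float:
    """Mean dead-time per level 0 trigger in a simple two-stage model:
    every trigger pays t_level1, only those passing level 1 also pay t_level2."""
    return t_level1_us + pass_fraction * t_level2_us


# HYPOTHETICAL inputs: 1% signal among level 0 triggers, 2% of nonsignal passing,
# 12us level 2 processing time. The 0.98 and 1.2us values are from the text.
f1 = level1_pass_fraction(signal_fraction=0.01, eps_signal=0.98, eps_nonsignal=0.02)
print(f1, mean_dead_time_per_l0(1.2, f1, 12.0))
```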

Another area where losses may be reduced is in the data acquisition dead-time.

Phil Rubin and David Abbott have found a way to reduce the time it takes

to read out and store an event from the 670![]() s observed during 1998

running to less than 300

s observed during 1998

running to less than 300![]() s. Being cautious, I have taken 300

s. Being cautious, I have taken 300![]() s

as the new estimate for readout dead-time per event

s

as the new estimate for readout dead-time per event ![]() . With

the level 1 trigger and data acquisition speed-up taken into account,

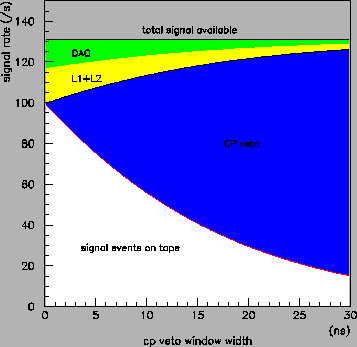

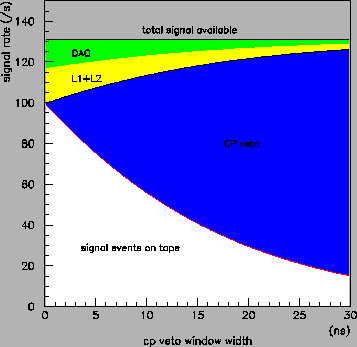

instead of Fig. 15 we now have Fig. 16. Fixing

the value of

. With

the level 1 trigger and data acquisition speed-up taken into account,

instead of Fig. 15 we now have Fig. 16. Fixing

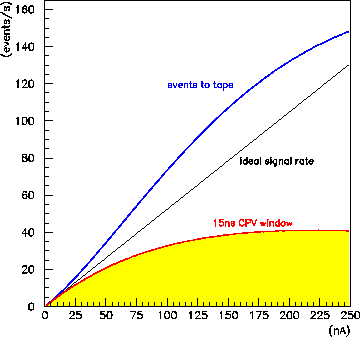

the value of ![]() at 15ns (minimum value for a fully efficient veto),

the yield of signal vs. beam intensity is shown in Fig. 17.

A significant improvement has been obtained over the situation in 1998

shown in Fig. 12 but we are still not in a position to make

effective use of beams much over 150nA.
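A minimal sketch shows why the readout speed-up matters. It uses the standard non-paralyzable dead-time formula, where the live fraction is 1/(1 + Rτ) for an accepted-trigger rate R and a per-event dead-time τ, and compares the 670μs and 300μs readout times. The proportionality between beam current and accepted rate (`RATE_PER_NA_HZ`) is a hypothetical placeholder. This single-stage formula also ignores the level 1 and level 2 stages that the full model behind Figs. 16 and 17 includes.

```python
def live_fraction(rate_hz: float, dead_time_s: float) -> float:
    """Non-paralyzable dead-time model: fraction of time the system is live."""
    return 1.0 / (1.0 + rate_hz * dead_time_s)


RATE_PER_NA_HZ = 5.0  # HYPOTHETICAL accepted-trigger rate per nA of beam

for current_na in (50, 100, 150, 200):
    rate = RATE_PER_NA_HZ * current_na
    old = live_fraction(rate, 670e-6)  # 1998 readout time
    new = live_fraction(rate, 300e-6)  # cautious new estimate
    print(f"{current_na:4d} nA  live(670us)={old:.3f}  live(300us)={new:.3f}")
```

In this model the recorded rate R/(1 + Rτ) saturates near 1/τ at high intensity, so shortening τ raises the ceiling on useful beam current.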
