
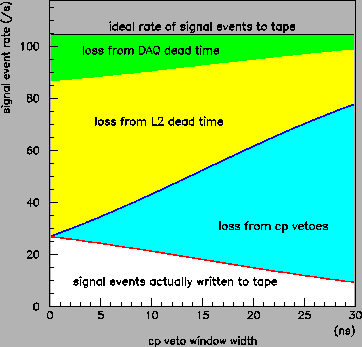

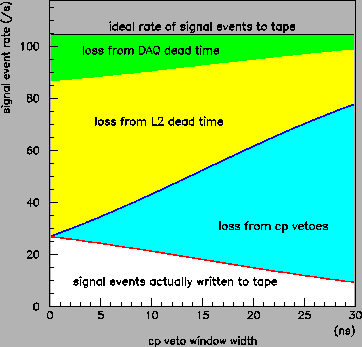

Supposing, then, that the model gives correct results, which part of the electronics chain is producing the majority of the losses? The answer is illustrated in Fig. 15. Depending upon the value one takes for the width of the CP veto window, the losses are shared more or less equally between random CP vetoes and dead time associated with the level 2 processor, with data acquisition contributing an additional 10-15%. Recall that the model is valid only for CP veto window widths above a certain lower bound. Reducing the window width below this bound will introduce a leak in the CP veto and cause the losses from the level 2 processor and DAQ to increase faster with decreasing veto window width than is shown in the plot. Note that Fig. 15 includes extra losses at level 2 from some broken ADC channels that generated significant processing overhead during the June period. This explains why the figure looks somewhat worse than the situation at 250 nA in Fig. 12, where the broken channels have been removed. Nevertheless, the conclusion drawn from this figure is correct: the CP veto and the level 2 processor are primarily responsible for the losses in our present setup.
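
The trade-off described above can be illustrated with a toy loss budget. This is a minimal sketch, not the model behind Fig. 15: it assumes that random CP vetoes are Poisson (a good event is lost if any uncorrelated CP hit lands inside the veto window), that the level 2 processor and the DAQ each behave as a non-paralyzable dead time, and that all rates scale linearly with beam current, in the /s/nA form used for the model inputs. The function names, parameters (including the level 2 accept fraction) and numbers are hypothetical placeholders, and the sketch does not model the veto leak below the validity bound.

```python
import math


def random_veto_fraction(cp_rate_hz: float, window_s: float) -> float:
    # Assumed Poisson: probability of at least one uncorrelated CP hit in the window
    return 1.0 - math.exp(-cp_rate_hz * window_s)


def dead_fraction(input_rate_hz: float, dead_time_s: float) -> float:
    # Assumed non-paralyzable dead time: fraction of arrivals that find the stage busy
    x = input_rate_hz * dead_time_s
    return x / (1.0 + x)


def loss_budget(current_na: float, cp_rate_per_na: float, trig_rate_per_na: float,
                window_s: float, l2_dead_s: float, l2_accept: float,
                daq_dead_s: float) -> dict:
    """Loss at each stage as a fraction of all good events (hypothetical toy model)."""
    cp_rate = cp_rate_per_na * current_na        # Hz, assumed linear in beam current
    trig_rate = trig_rate_per_na * current_na    # Hz of triggers presented to the veto
    f_veto = random_veto_fraction(cp_rate, window_s)
    l2_in = trig_rate * (1.0 - f_veto)           # triggers that survive the CP veto
    f_l2 = dead_fraction(l2_in, l2_dead_s)
    daq_in = l2_in * (1.0 - f_l2) * l2_accept    # events accepted by level 2
    f_daq = dead_fraction(daq_in, daq_dead_s)
    return {
        "cp_veto": f_veto,
        "level2": (1.0 - f_veto) * f_l2,
        "daq": (1.0 - f_veto) * (1.0 - f_l2) * f_daq,
        "total": 1.0 - (1.0 - f_veto) * (1.0 - f_l2) * (1.0 - f_daq),
    }


if __name__ == "__main__":
    # Placeholder inputs for illustration only; these are NOT the measured model inputs.
    for window_ns in (10, 20, 30, 40):
        b = loss_budget(current_na=250.0, cp_rate_per_na=1.0e4, trig_rate_per_na=20.0,
                        window_s=window_ns * 1e-9, l2_dead_s=20e-6, l2_accept=0.1,
                        daq_dead_s=100e-6)
        print(window_ns, {name: round(frac, 3) for name, frac in b.items()})
```

In this toy, widening `window_s` raises the CP veto loss while slightly lowering the level 2 and DAQ losses, since fewer triggers survive to load the processor. That matches the direction described in the text, but the actual balance depends entirely on the measured inputs.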


| parameter | value | method |
|---|---|---|
| | /s/nA | fit to scaler data |
| | /s/nA | fit to scaler data |
| | /s/nA | fit to scaler data |
| | /s/nA | fit to scaler data |
| | s | fit to scaler data |
| | s | fit to scaler data |
| | s | fit to scaler data |
| | s | fit to scaler data |
| | s | measured on scope |
| | s | measured on scope |
| | | measured at low rates |
| | | measured with veto in/out |
| | | fit to scaler data |
| | | fit to scaler data |

A complete list of the model inputs used to generate the figures in this section is given in Table 2.1. Many of these numbers changed as timing, thresholds and gains were adjusted throughout the run period, and a few were sensitive to the quality of the beam tune. The values given in the table describe the situation during the intensity scan taken at the end of the June period.
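
Several of the model inputs are rates per nA and times in seconds obtained by fitting scaler data. As a minimal sketch of how one such pair could be extracted, assume (this is an assumption, not the documented procedure) that a scaler counts behind a non-paralyzable dead time $\tau$, so that the observed rate at beam current $I$ is $m = kI/(1 + kI\tau)$. Then $1/m = (1/k)(1/I) + \tau$ is linear in $1/I$, and an ordinary least-squares line gives $k$ from the slope and $\tau$ from the intercept. The function names are hypothetical.

```python
from typing import Sequence, Tuple


def observed_rate(k_per_na: float, tau_s: float, current_na: float) -> float:
    """Scaler rate (Hz) behind an assumed non-paralyzable dead time tau_s."""
    true_rate = k_per_na * current_na
    return true_rate / (1.0 + true_rate * tau_s)


def fit_rate_and_dead_time(currents_na: Sequence[float],
                           rates_hz: Sequence[float]) -> Tuple[float, float]:
    """Return (k in /s/nA, tau in s) from a straight-line fit of 1/m against 1/I."""
    if len(currents_na) != len(rates_hz) or len(currents_na) < 2:
        raise ValueError("need at least two (current, rate) pairs")
    xs = [1.0 / i for i in currents_na]   # 1/I
    ys = [1.0 / m for m in rates_hz]      # 1/m
    n = len(xs)
    sx, sy = sum(xs), sum(ys)
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    slope = (n * sxy - sx * sy) / (n * sxx - sx * sx)   # = 1/k
    intercept = (sy - slope * sx) / n                    # = tau
    return 1.0 / slope, intercept


if __name__ == "__main__":
    # Synthetic, noise-free check with made-up k and tau (not measured values)
    currents = [50.0, 100.0, 150.0, 200.0, 250.0]
    rates = [observed_rate(2000.0, 1.0e-5, i) for i in currents]
    print(fit_rate_and_dead_time(currents, rates))
```

With real, noisy scaler counts, inverting the rates distorts the error bars, so a weighted nonlinear fit of $m(I)$ directly (for example `scipy.optimize.curve_fit` with its `sigma` argument) would be the safer choice; the linearized form is used here only because it needs no external libraries.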