How to Monitor Jobs

Latest revision as of 23:03, 24 February 2017

Check the state of the job

Check the jobs of a specific user

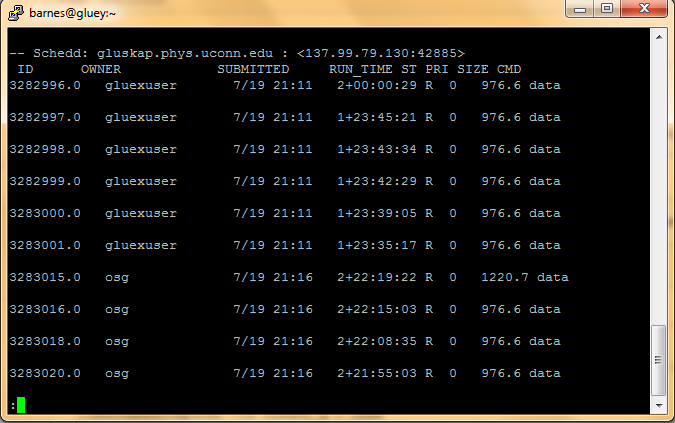

Once jobs have been submitted to the cluster, you can monitor them by running the following command in a terminal:

[username@computer ~]$ condor_q -submitter <username> | less

This displays one row per job, with the following columns:

- ID: the job ID, in the form cluster.process

- OWNER: the owner of the job

- SUBMITTED: the date and time it was submitted

- RUN_TIME: how long it has been running

- ST: its current status (R = running, H = held, I = idle)

- SIZE: the job's memory image size, in MB

- CMD: the program name

This is a quick way to check on the status of your own jobs.
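For a quick summary instead of the full listing, jobs can also be tallied by state. This is a minimal sketch, assuming your HTCondor version supports the autoformat option (`condor_q -af`) and uses the standard numeric codes of the JobStatus job attribute; `status_letter` and `count_by_status` are hypothetical helper names, not HTCondor commands.

```shell
# Map a numeric JobStatus code to the letter shown in the ST column.
status_letter() {
  case "$1" in
    1) echo I ;;   # idle
    2) echo R ;;   # running
    3) echo X ;;   # removed
    4) echo C ;;   # completed
    5) echo H ;;   # held
    6) echo '>' ;; # transferring output
    7) echo S ;;   # suspended
    *) echo '?' ;; # anything unexpected
  esac
}

# Read one JobStatus code per line on stdin and print "LETTER COUNT"
# for each state that appears.
# Assumed input source: condor_q "$USER" -af JobStatus
count_by_status() {
  local code
  while read -r code; do
    status_letter "$code"
  done | sort | uniq -c | awk '{ print $2, $1 }'
}
```

For example, `condor_q "$USER" -af JobStatus | count_by_status` would print one line per state, such as `H 3` or `R 12`.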

Check all jobs

To see all of the jobs in the queue, run:

condor_q | less
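On a busy cluster this listing can be long, so it may help to count jobs per owner instead. Note that in HTCondor 8.6 and later, plain `condor_q` shows only your own jobs by default and `-allusers` is needed to list everyone's; check `condor_q -help` on this cluster. A minimal sketch, assuming owner names are fed in one per line (for example from `condor_q -allusers -af Owner`); `jobs_per_owner` is a hypothetical helper:

```shell
# Count queued jobs per owner, busiest owner first, then print a total.
# Assumed input: one owner name per line, e.g. from
#   condor_q -allusers -af Owner
jobs_per_owner() {
  sort | uniq -c | sort -rn |
    awk '{ print $2, $1; total += $1 } END { print "TOTAL", total + 0 }'
}
```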

Held job?

If you check the status of your job and find that it has been held (H in the ST column), there are a couple of likely causes.

Memory thrashing

Your job is using more memory than you requested, which forces the server to use swap space on the hard disk instead of RAM. This thrashes the machine and slows down every job running on it. A script runs periodically to look for such jobs, and any job found thrashing a machine is held.

To confirm this, open the log file for that job ID and find the entry stating that your job was held and checkpointed. It lists the disk and memory your job used alongside what it requested. If the usage is significantly larger than the request, the job was using swap.
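Rather than reading the log by eye, you can search it. This sketch assumes the standard HTCondor user-log format, in which each event starts with a three-digit event code and the job ID in parentheses (code 012 marks a held job), and that your HTCondor version writes a resource table with a "Memory (MB)" line in the events that report usage. The log path is whatever your submit file names; the function names are hypothetical.

```shell
# Show each held event (code 012) in a user log ($1) plus the line after it,
# which usually carries the hold reason.
held_events() {
  grep -A 1 '^012 (' "$1"
}

# Report "Memory (MB)" resource lines where usage exceeds the request.
# Assumes the column order "Usage Request Allocated" after the colon.
memory_overuse() {
  awk -F':' '/Memory \(MB\)/ {
    split($2, v, " ")
    if (v[1] + 0 > v[2] + 0) print "used " v[1] " MB, requested " v[2] " MB"
  }' "$1"
}
```

Any line printed by `memory_overuse` points to a job that used more memory than it asked for, the situation described above.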

Submitted too many jobs

The cluster can only handle a limited number of queued jobs. If you submit tens of thousands of jobs at once, they will be held, because the cluster cannot negotiate that many queued jobs at one time.

Never submit more than 5,000 jobs at a time, and remember that other users may be submitting many jobs as well.
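If you have more than 5,000 tasks, one way to stay under the limit is to split them into batches and submit one batch at a time. A minimal sketch that only computes the batch boundaries; `batch_ranges` is a hypothetical helper, and how each range is passed to your submit file is up to you.

```shell
# Print 0-based, inclusive "first last" index ranges covering TOTAL tasks
# in batches of at most SIZE tasks (default 5000, the limit stated above).
batch_ranges() {
  local total=$1 size=${2:-5000} first=0 last
  while [ "$first" -lt "$total" ]; do
    last=$(( first + size - 1 ))
    if [ "$last" -ge "$total" ]; then
      last=$(( total - 1 ))   # the final batch may be smaller
    fi
    echo "$first $last"
    first=$(( first + size ))
  done
}
```

For example, `batch_ranges 12000` yields three ranges. Before submitting the next batch, you could check how many of your jobs are still queued, for instance with `condor_q "$USER" -af ClusterId | wc -l`.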

Check which machine the job is running on

Another useful command is condor_status, which reports information about the cluster machines:

[username@computer ~]$ condor_status [-r] | grep stat | less

This shows a list of the machine resources; if the -r option is supplied, only machines with running jobs are shown.
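To go from the job to the machine, you can also query condor_q, whose `-run` option lists the host of each running job. The sketch below instead uses autoformat output, assuming the RemoteHost job attribute (set while a job runs, typically as slot@machine) and condor_q's `-constraint` and `-af` options; `job_hosts` is a hypothetical helper.

```shell
# Turn "ClusterId ProcId RemoteHost" lines into "cluster.proc machine",
# dropping the slot name before the "@" in RemoteHost.
# Assumed input, e.g. from:
#   condor_q "$USER" -constraint 'JobStatus == 2' -af ClusterId ProcId RemoteHost
job_hosts() {
  awk '{ host = $3; sub(/^[^@]*@/, "", host); printf "%s.%s %s\n", $1, $2, host }'
}
```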

If there are any concerns about a specific job, please contact the main administrator.

Check the job as it runs

Check stdout

While a job is running, you can see the tail of its stdout file with:

condor_tail <job_id>
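If you check output often, a small wrapper can pick between stdout and stderr using the same condor_tail options shown on this page. A sketch; `job_tail` is a hypothetical helper, and any further arguments are passed through to condor_tail unchanged (see `condor_tail -help` for what your version accepts).

```shell
# Hypothetical wrapper around condor_tail.
# Usage: job_tail <job_id> [stdout|stderr] [extra condor_tail options...]
job_tail() {
  local id=$1 stream=${2:-stdout}
  case "$stream" in
    stdout|stderr) ;;
    *) echo "usage: job_tail <job_id> [stdout|stderr] [options]" >&2; return 2 ;;
  esac
  shift                             # drop the job ID
  if [ $# -gt 0 ]; then shift; fi   # drop the stream name, if given
  if [ "$stream" = stderr ]; then
    condor_tail "$@" -no-stdout -stderr "$id"
  else
    condor_tail "$@" "$id"
  fi
}
```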

Check stderr

To check the job's stderr for errors, run:

condor_tail -no-stdout -stderr <job_id>
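For long stderr output, you can filter the tail down to lines that look like errors. A minimal sketch; the keyword list is a guess and should be adjusted to the messages your program actually prints, and `error_lines` is a hypothetical helper.

```shell
# Print, with line numbers, only the stdin lines that look like errors.
# Example: condor_tail -no-stdout -stderr 1234.0 | error_lines
error_lines() {
  grep -n -i -E 'error|exception|traceback|fatal'
}
```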